AI integration isn’t a feature. It’s a capability.

Nowadays, every organization wants to integrate AI. Whether it’s into products, operations, or decision loops, they all want the AI advantage.

But there’s a growing gap between intent and impact. Building a proof-of-concept is relatively easy, but integrating AI into the DNA of the business is where most teams struggle. That’s because many treat AI as a feature: a chatbot here, a recommendation engine there. These projects may show early results, but they rarely scale.

In reality, AI is a capability, one that must be architected into the fabric of your business. It sits at the intersection of data, infrastructure, workflows, and human context. Without alignment across these layers, AI becomes just another disconnected tool in the stack.

The teams that succeed at integrating AI think differently. They treat AI as a horizontal capability, not a vertical feature. They build AI into the architecture, not just the app. They understand that AI integration isn’t about “adding intelligence”; it is about designing systems that learn, adapt, and improve over time.

At Talentica, we have helped both startups and enterprises integrate AI into real-world products, for use cases including compliance automation, intelligent document parsing, revenue generation, AI-enhanced personalization, and more. This article distills what we have learned while integrating AI and how your team can get it right.

Before you integrate – Are you ready for AI?

Most companies skip this question. That’s mistake #1.

Successful AI integration isn’t about “can we train a model?” It’s about whether the model can live inside your product and operations without breaking things. If your data is fragmented, your workflows are brittle, or your infrastructure is not flexible, AI won’t fix that. It will magnify it.

Before you even write your first model prompt or fine-tune your first LLM, you need to ask:

Do you have the right data and enough of it?

AI runs on data, but not all data is created equal.

- Do you have clean, labeled, and relevant data that reflects real-world usage?

- Is it centralized, or stuck in silos and spreadsheets?

AI readiness starts with data readiness.
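The two questions above can be turned into a quick, repeatable check. The sketch below is a minimal illustration, not a full data-quality framework: the function name `data_readiness_report`, the field names, and the sample records are all hypothetical, and a real check would add domain-specific rules.

```python
from collections import Counter

def data_readiness_report(rows, required_fields, label_field):
    """Summarize basic data-quality signals for a list of dict records."""
    total = len(rows)
    if total == 0:
        return {"rows": 0, "missing_rate": {}, "label_coverage": 0.0, "duplicate_rate": 0.0}

    # Share of records where each required field is absent or empty.
    missing_rate = {
        field: sum(1 for r in rows if r.get(field) in (None, "")) / total
        for field in required_fields
    }
    # Share of records that carry a label at all.
    labeled = sum(1 for r in rows if r.get(label_field) not in (None, ""))
    # Exact duplicates: identical field/value pairs appearing more than once.
    counts = Counter(tuple(sorted(r.items())) for r in rows)
    duplicates = sum(c - 1 for c in counts.values())

    return {
        "rows": total,
        "missing_rate": missing_rate,
        "label_coverage": labeled / total,
        "duplicate_rate": duplicates / total,
    }

# Hypothetical sample records, for illustration only.
sample = [
    {"customer_id": "c1", "amount": 120.0, "label": "approved"},
    {"customer_id": "c2", "amount": None, "label": "rejected"},
    {"customer_id": "c1", "amount": 120.0, "label": "approved"},
    {"customer_id": "c3", "amount": 75.5, "label": None},
]
report = data_readiness_report(sample, ["customer_id", "amount"], "label")
```

Running a report like this per source system makes silos visible: a dataset that only exists in a spreadsheet usually cannot be fed through the same check without manual work.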

Is your infrastructure flexible enough to support AI workloads?

You don’t need to rebuild your entire stack, but you do need an architecture that can support experimentation, model deployment, and iteration.

This includes pipelines, versioning, observability, and the ability to roll back safely.
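To make "versioning and the ability to roll back safely" concrete, here is a minimal in-memory sketch. The `ModelRegistry` class is hypothetical and the "models" are plain functions. In practice a dedicated registry tool would play this role, but the contract is the same: register, promote, and roll back.

```python
class ModelRegistry:
    """In-memory registry that tracks model versions and supports rollback."""

    def __init__(self):
        self._versions = {}   # version -> model artifact (any callable here)
        self._history = []    # order in which versions were promoted to live

    def register(self, version, model):
        if version in self._versions:
            raise ValueError(f"version {version!r} already registered")
        self._versions[version] = model

    def promote(self, version):
        if version not in self._versions:
            raise KeyError(f"unknown version {version!r}")
        self._history.append(version)

    def rollback(self):
        """Return to the previously promoted version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def live_version(self):
        return self._history[-1] if self._history else None

    def predict(self, features):
        if self.live_version is None:
            raise RuntimeError("no model has been promoted")
        return self._versions[self.live_version](features)

# Hypothetical rule-based "models" standing in for trained artifacts.
registry = ModelRegistry()
registry.register("v1", lambda x: x["amount"] > 100)
registry.register("v2", lambda x: x["amount"] > 250)
registry.promote("v1")
registry.promote("v2")
decision_v2 = registry.predict({"amount": 180})
registry.rollback()
decision_v1 = registry.predict({"amount": 180})
```

Because callers only ever go through `predict`, switching or reverting a version does not require any change in the product code.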

Are your teams aligned?

AI integration is not just a tech decision. It is a team sport.

Product, engineering, data science, and domain stakeholders all need a shared vocabulary and aligned expectations.

- Do your teams know what success looks like and how to measure it?

Do you have a feedback loop in place?

AI systems learn. That only works if the loop is closed.

- Can we monitor, measure, and iterate on our AI decisions in production?
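One way to picture a closed loop is a log that pairs each prediction with the outcome observed later. The sketch below is illustrative only: `FeedbackLog`, the identifiers, and the labels are hypothetical, and a production system would persist this data rather than hold it in memory.

```python
class FeedbackLog:
    """Pairs predictions with later-observed outcomes to measure live accuracy."""

    def __init__(self):
        self._predictions = {}  # prediction_id -> predicted label
        self._outcomes = {}     # prediction_id -> observed label

    def log_prediction(self, prediction_id, predicted):
        self._predictions[prediction_id] = predicted

    def log_outcome(self, prediction_id, actual):
        if prediction_id not in self._predictions:
            raise KeyError(f"no prediction logged for {prediction_id!r}")
        self._outcomes[prediction_id] = actual

    def summary(self):
        resolved = len(self._outcomes)
        correct = sum(
            1 for pid, actual in self._outcomes.items()
            if self._predictions[pid] == actual
        )
        total = len(self._predictions)
        return {
            "predictions": total,
            "resolved": resolved,
            # Coverage: how much of the model's output ever receives feedback.
            "coverage": resolved / total if total else 0.0,
            "accuracy": correct / resolved if resolved else None,
        }

log = FeedbackLog()
for pid, label in [("p1", "churn"), ("p2", "stay"), ("p3", "churn"), ("p4", "stay")]:
    log.log_prediction(pid, label)
for pid, label in [("p1", "churn"), ("p2", "churn"), ("p3", "churn")]:
    log.log_outcome(pid, label)
summary = log.summary()
```

Low coverage is itself a finding: if most predictions never receive an outcome, the loop is open no matter how good the accuracy on the resolved subset looks.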

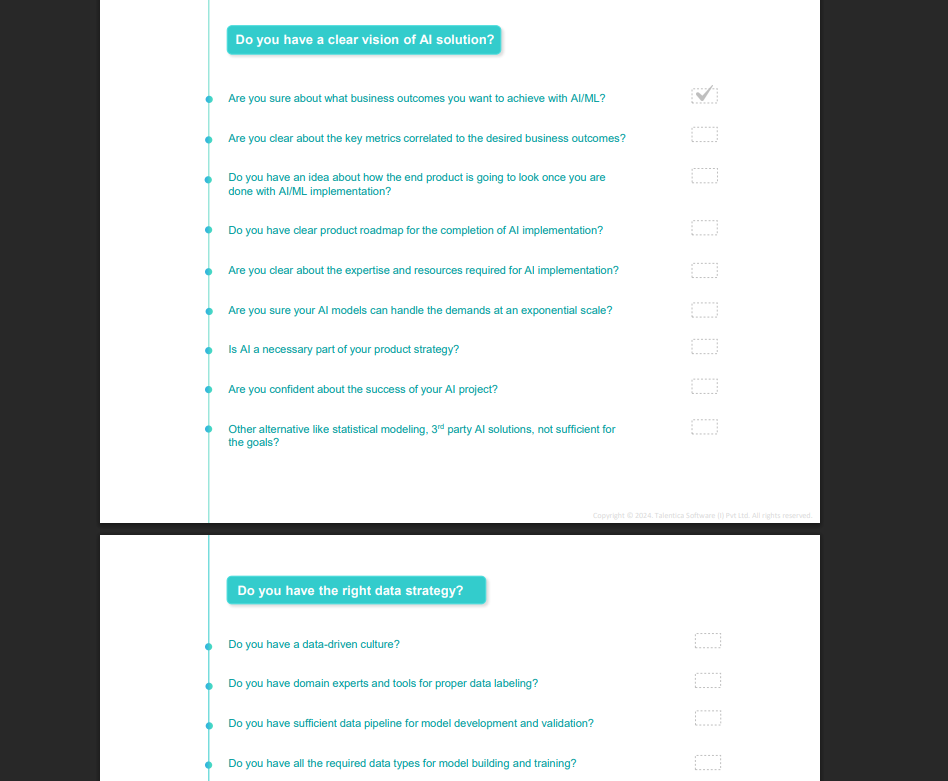

In our experience, teams that take time to assess AI readiness build systems that scale, evolve, and generate lasting impact. To check your AI readiness and make smarter decisions, you can download our free AI readiness checklist.

We have developed this checklist based on our past experience in developing AI solutions for VC-funded startups, high-growth tech companies, and mature Big Tech products.


In the next section, we will break down what “Integration” actually looks like.

5 steps to successful AI integration

We follow a five-step approach that aligns engineering rigor with iterative experimentation.

Exploratory Data Analysis (EDA)

We begin with what matters most: your data. Noisy, sparse, or unlabeled data is a common blocker. Our data engineers:

- Build ingestion pipelines

- Clean, enrich, and label datasets

We also build observability into every data stream so that data isn’t just collected; it’s actionable.
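As a rough illustration of observability inside a cleaning step, the sketch below returns counters alongside the cleaned records, so every run reports what it dropped and why. The function `clean_stream`, the `text` and `label` fields, and the sample tickets are assumptions made for this example.

```python
from collections import Counter

def clean_stream(records):
    """Return cleaned records plus counters describing what happened to the input."""
    stats = Counter()
    cleaned = []
    for record in records:
        stats["seen"] += 1
        text = (record.get("text") or "").strip()
        if not text:
            stats["dropped_empty_text"] += 1
            continue
        if record.get("label") is None:
            stats["unlabeled"] += 1  # kept, but flagged for a labeling queue
        cleaned.append({**record, "text": text})
        stats["kept"] += 1
    return cleaned, stats

# Hypothetical support-ticket records, for illustration only.
tickets = [
    {"text": "  invoice overdue ", "label": "billing"},
    {"text": "", "label": "billing"},
    {"text": "reset password", "label": None},
    {"text": None, "label": "other"},
]
cleaned, stats = clean_stream(tickets)
```

Emitting these counters to a metrics system on each run turns a silent cleaning step into one that can trigger an alert when the drop rate changes.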

Modeling

We don’t just plug in LLMs or neural nets because they are popular. We follow a model-agnostic approach: we evaluate across model families (e.g., tree-based, transformer, statistical) and then pick what works for:

- Your specific use-case

- Accuracy and latency constraints

- Interpretability and trust requirements

- Your infrastructure and scalability goals
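The criteria above can be expressed as explicit constraints rather than left to preference. The following sketch assumes each candidate has already been benchmarked. The `Candidate` fields, the model names, and every number are hypothetical placeholders, not measured results.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    accuracy: float          # offline accuracy on a held-out set
    p95_latency_ms: float    # measured on the target infrastructure
    interpretable: bool

def select_model(candidates, max_latency_ms, require_interpretable=False):
    """Pick the most accurate candidate that satisfies the hard constraints."""
    eligible = [
        c for c in candidates
        if c.p95_latency_ms <= max_latency_ms
        and (c.interpretable or not require_interpretable)
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda c: c.accuracy)

# Hypothetical benchmark results, for illustration only.
candidates = [
    Candidate("gradient_boosted_trees", accuracy=0.91, p95_latency_ms=12, interpretable=True),
    Candidate("transformer", accuracy=0.94, p95_latency_ms=180, interpretable=False),
    Candidate("logistic_regression", accuracy=0.87, p95_latency_ms=2, interpretable=True),
]
fast_choice = select_model(candidates, max_latency_ms=50)
relaxed_choice = select_model(candidates, max_latency_ms=500)
regulated_choice = select_model(candidates, max_latency_ms=500, require_interpretable=True)
```

Treating latency and interpretability as filters and accuracy as the tiebreaker is one possible design. Teams with softer constraints might prefer a weighted score instead.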

Evaluation and interpretability

Accuracy isn’t enough. Trust matters. We don’t ship until the model earns human trust. That’s why we focus on evaluation and interpretability:

- Offline validation using cross-validation & stratified testing

- Online evaluation via A/B testing for real-world data reliability

- Explainability using SHAP and LIME to interpret model behavior

- Confidence scoring to quantify prediction certainty

- Visual dashboards to make results transparent and actionable
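For the offline validation step, stratification matters most when one class is rare. The sketch below shows the idea with the standard library only. It is a simplified stand-in for what ML libraries provide, and the labels are hypothetical.

```python
import random
from collections import defaultdict

def stratified_folds(labels, k, seed=0):
    """Assign each sample index to one of k folds, keeping class ratios similar."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal indices round-robin so each fold gets a near-equal share of this class.
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds

# Hypothetical imbalanced dataset: 4 fraud cases among 20 samples.
labels = ["fraud"] * 4 + ["ok"] * 16
folds = stratified_folds(labels, k=4)
fraud_per_fold = [sum(1 for i in fold if labels[i] == "fraud") for fold in folds]
```

With a plain random split, some folds could easily contain no fraud cases at all, which would make per-fold metrics for the rare class meaningless.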

Regulators want explainability. So do your end users.

Packaging and deployment

We deploy AI via APIs, message brokers (like Kafka), or microservices that talk to your existing systems. Decoupling is key to maintainability and model lifecycle agility.
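The decoupling idea can be sketched without any broker installed. In the example below, two in-process queues stand in for broker topics (for example Kafka topics). The topic names, the message schema, and the placeholder `score` rule are assumptions for illustration.

```python
import json
import queue

requests_topic = queue.Queue()   # stands in for a broker topic of scoring requests
scores_topic = queue.Queue()     # stands in for a broker topic of scoring results

def score(features):
    """Placeholder model: a hypothetical rule standing in for a trained model."""
    return 0.9 if features["amount"] > 1000 else 0.1

def publish_request(event_id, features):
    # The product only knows the message schema, not the model behind it.
    requests_topic.put(json.dumps({"id": event_id, "features": features}))

def run_scoring_worker():
    """Drain pending requests and publish one score message per request."""
    while not requests_topic.empty():
        message = json.loads(requests_topic.get())
        result = {
            "id": message["id"],
            "score": score(message["features"]),
            "model_version": "v1",  # lets consumers trace which model answered
        }
        scores_topic.put(json.dumps(result))

publish_request("evt-1", {"amount": 2500})
publish_request("evt-2", {"amount": 40})
run_scoring_worker()
results = [json.loads(scores_topic.get()) for _ in range(scores_topic.qsize())]
```

Because the product and the model only share a message schema, the worker can be retrained, replaced, or scaled independently of the systems that publish requests.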

MLOps and monitoring

Production is the start, not the finish line. We embed MLOps from day one to support experimentation at scale. Our teams use tools like MLflow and Kubeflow to set up:

- Continuous training pipelines

- Version-controlled deployments

- CI/CD for models

- Drift monitoring and rollback

What’s deployed today won’t stay relevant forever. MLOps ensures continuous learning.
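Drift monitoring can start with a simple distribution comparison. The sketch below computes the Population Stability Index (PSI) between a reference sample and a recent sample of model scores. The bin edges, the sample values, and the idea of alerting above a fixed threshold are assumptions each team would tune for itself.

```python
import math

def population_stability_index(expected, actual, bin_edges, epsilon=1e-6):
    """PSI between a reference sample and a recent sample over fixed bins."""
    def bin_shares(values):
        counts = [0] * (len(bin_edges) - 1)
        for value in values:
            for i in range(len(counts)):
                last_bin = i == len(counts) - 1
                # The last bin is closed on the right so the maximum edge is included.
                upper_ok = value <= bin_edges[i + 1] if last_bin else value < bin_edges[i + 1]
                if bin_edges[i] <= value and upper_ok:
                    counts[i] += 1
                    break
        total = sum(counts) or 1
        # Floor empty bins at epsilon so the logarithm stays defined.
        return [max(c / total, epsilon) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
# Hypothetical model scores at training time and in recent production traffic.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95]

psi_stable = population_stability_index(training_scores, training_scores, edges)
psi_shifted = population_stability_index(training_scores, recent_scores, edges)
```

A PSI of zero means the two distributions match across the bins. A value that keeps rising is a signal to investigate and, if needed, retrain or roll back.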

7 common mistakes to avoid

Over the years, we have observed a few recurring anti-patterns in AI integration.

| Mistake | Why it’s costly | What it affects |
| --- | --- | --- |
| Collecting “more” data instead of “good” data | Leads to biased or unusable outcomes. | Model performance, accuracy, fairness |
| Choosing the wrong model | Can choke performance, increase latency, or inflate costs. | Performance, scalability, user trust |
| Ignoring offline evaluation | Without cross-validation, you risk deploying a model that only performs on training data. | Accuracy, business impact |
| Skipping online evaluation | Models that look good offline can fail against real-world behavior. No A/B testing = no guardrails. | Conversion rates, product metrics |
| No explainability layer | Slows adoption and raises compliance risk. | Regulatory compliance, user trust |
| Deploying models without human feedback loops | Models reinforce bad behavior silently. | Business logic alignment, adoption, high error rates, and costly outcomes (e.g., sending wrong offers, triggering wrong alerts) |
| Skipping model monitoring | Models degrade silently in dynamic environments. No observability = no awareness of drift, latency, or failure. | Long-term model health, system reliability |

You can avoid these pitfalls by building explainability and traceability into the system from day one.

But among all the mistakes we have seen, one stands out for its ability to derail AI integration efforts: not having the right people on the team.

AI integration isn’t just about models and APIs. It is about translating complex algorithms into real-world software systems that work at scale. And that requires tight collaboration between data scientists, domain experts, and product engineering teams.

Teams that don’t bridge these roles early often fall into rework, misaligned expectations, and fragile integrations that never leave the lab. In our experience, the highest ROI comes from aligning AI talent with product engineering, not separating them.

If your AI team can’t ship or your engineering team can’t understand the models, integration will stall. Our advice? If you don’t have the right mix internally, don’t hesitate to bring in external experts. Outsourcing AI integration can be a smart way to fill critical skill gaps, accelerate timelines, and reduce rework.

This isn’t a nice-to-have. It’s a multiplier.


Tools and platforms used in AI Integration

Here, we have listed some of the primary tools and frameworks you can leverage to integrate AI in your business.

| Category | Tools |
| --- | --- |
| MLOps | Airflow, MLflow, ZenML |
| Explainability | SHAP, LIME |
| Integration | Kafka, REST APIs, FastAPI |
| Infra | Docker, Kubernetes |
| Libraries and frameworks | Scikit-learn, Pandas, NumPy, PyTorch, TensorFlow, Keras |
| Visualization | Matplotlib, Seaborn |
| GenAI Stack | LangChain, LangGraph, Crew.ai, etc. |

What does it cost to integrate AI?

The honest answer? It depends.

AI integration costs vary widely based on your use case, scale, data availability, your current infrastructure, and how deeply AI needs to be embedded.

Let’s understand this with scenarios:

- Scenario A: A SaaS startup already running on a modern cloud stack wants to add a GenAI-powered “smart search” feature. Most of the spending goes into fine-tuning a domain-specific model and wiring it into their existing Kubernetes cluster. Because engineering pipelines are in place, the incremental cost stays lean and predictable.

- Scenario B: A mid-sized financial services firm aims to embed real-time credit risk scoring across multiple regions. They must unify customer and transaction data, comply with regional regulations, and build 24×7 model observability. Even without disclosing amounts, it’s clear their budget is dominated by data engineering, compliance automation, and high-availability infrastructure, far beyond the cost of the model itself.

AI integration is not a single line item. It is the sum of data readiness, engineering rigor, and operational maturity. Understanding where you stand on each dimension is the first step to budgeting wisely and avoiding surprises when the proof-of-concept moves to production.

Measuring the ROI of AI integration

AI value is not just in the output; it is in the decision change it enables. Here’s how to approach ROI the right way:

Define success early

Ask:

- What behavior are we trying to improve?

- What cost are we trying to reduce?

- What process are we trying to accelerate?

Don’t default to accuracy or precision unless your business truly runs on those metrics. Focus on outcomes, not model performance.

Example: For a lead scoring model, the goal is not just a better prediction; it is increasing sales team efficiency or boosting conversion rates.

Use tiered metrics: AI effectiveness vs. business impact

Split your measurement into two categories:

- AI performance metrics – precision, recall, latency, drift detection.

- Business KPIs – revenue per customer, churn reduction, manual effort saved, support ticket resolution time, etc.

Your AI could be 97% accurate, but if it doesn’t move a business needle, it’s academic.

For example: A B2B SaaS startup added AI-driven personalization, leading to a 15% increase in upsell conversations, not because the algorithm was “smart”, but because it nudged users at the right time with the right message.
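A two-tier scorecard can be as simple as reporting both kinds of numbers side by side. In the sketch below, the helper names, the predictions, and the conversion rates are all hypothetical and only illustrate the shape of such a report.

```python
def precision_recall(predicted, actual, positive=True):
    """Precision and recall for one positive class, from paired labels."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p == positive and a == positive)
    fp = sum(1 for p, a in pairs if p == positive and a != positive)
    fn = sum(1 for p, a in pairs if p != positive and a == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def relative_change(baseline, current):
    """Relative change of a business KPI against its pre-launch baseline."""
    return (current - baseline) / baseline

# Hypothetical lead-scoring predictions versus what actually happened.
predicted = [True, True, False, True, False, False]
actual = [True, False, False, True, True, False]
precision, recall = precision_recall(predicted, actual)

scorecard = {
    "ai_performance": {"precision": precision, "recall": recall},
    # Hypothetical conversion rates before and after launch.
    "business_kpis": {"conversion_rate_change": relative_change(0.05, 0.06)},
}
```

Keeping both tiers in one report makes the gap visible when model metrics improve but the business KPI does not move.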

Don’t skip the counterfactual

Always ask, “What would happen if the AI wasn’t there?”

This helps isolate AI’s contribution from broader improvements like UI changes or team growth.

A/B testing, phased rollouts, and shadow mode deployment are your friends here. They help tie AI inputs directly to outcomes.
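For an A/B test, the counterfactual question becomes a comparison of two conversion rates. The sketch below uses a standard two-proportion z-test. The traffic and conversion counts are hypothetical, and a real experiment would also need a pre-registered sample size and guardrail metrics.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and treatment (b)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value

# Hypothetical experiment: control without the AI feature, treatment with it.
lift, z, p_value = two_proportion_z_test(conv_a=400, n_a=10_000, conv_b=460, n_b=10_000)
```

The absolute lift answers "how much", while the p-value indicates how likely a difference this large would be if the AI feature had no effect.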

Track the long tail

Many AI systems show early wins but degrade over time. Measuring ROI over a 6-month or 12-month horizon helps reveal:

- Model drift

- Process misalignment

- Underutilization by teams

Real ROI is repeatable value, not a one-time lift.

If you can’t measure impact at the business layer, you are not done integrating AI; you are just playing with it.

Conclusion

AI integration isn’t a sprint. It is a carefully designed evolution of your product or platform. And it only delivers value when engineering, data, and business come together.

At Talentica, we don’t just integrate AI into products; we engineer intelligent systems end-to-end. With a team that spans Gen AI experts, classical ML specialists, and full-stack engineers, we help you go beyond proof-of-concept to build scalable, production-ready AI capabilities. From data pipelines to model tuning, we integrate AI into the fabric of your product with precision, reliability, and speed.