The cracks in global healthcare systems are not new, but they are widening. Clinicians are bogged down not only by patient loads but by paperwork, coordination work, and legacy processes that simply won’t die. The real crisis? Time: there is too little of it to provide care the way care needs to be provided. Agentic AI is starting to fill this gap, and in doing so it is changing how care is delivered.

What is agentic AI?

Agentic AI refers to AI systems that can plan and execute multi-step tasks and make decisions on their own. They can schedule appointments, follow up with patients, and optimize processes with minimal human intervention. A good example is Hippocratic AI, a Silicon Valley startup that raised $141 million in early 2025 and became a unicorn. Its “AI agent app store” lets healthcare professionals “hire” generative-AI-powered agents for routine, non-clinical tasks, such as registering patients with chronic conditions and post-discharge follow-up.

Automating these back-office processes is quickly emerging as a central driver of growth in healthcare, and Grand View Research has identified it as a sizeable market opportunity. Mayo Clinic, for example, began testing VoiceCare AI agents in February 2025 to manage back-office processes, with the aim of streamlining workflows and reducing errors.

In this blog, we dissect the anatomy of agentic AI in healthcare, explore how it works, and discuss the production challenges and solutions.

Understanding agentic AI anatomy

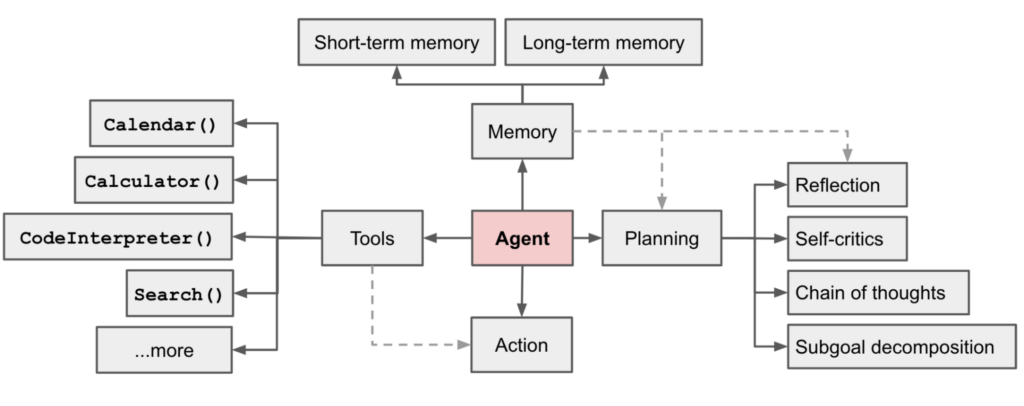

Agentic AI systems are composed of interdependent components that enable them to think, remember, execute actions, and interact dynamically with healthcare settings. Understanding this anatomy matters because it shows why these agents are well suited to complex, high-stakes healthcare work.

Tools

AI agents leverage external tools—APIs, databases, and even web sources—to gain access to real-time information that is outside of their training data.

In the case of healthcare, this may involve pulling the most current lab results from the EHR, checking insurance eligibility APIs, or accessing clinical guidelines.
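As a minimal sketch, assuming the EHR exposes a FHIR R4 API, a lab-lookup tool might simply build a standard FHIR search for the patient’s most recent result. The base URL, patient ID, and the `latest_lab_url`/`call_tool` names are hypothetical; `patient`, `code`, `_sort`, and `_count` are standard FHIR search parameters, and 2345-7 is the LOINC code for glucose in serum or plasma. To keep the example runnable offline, the tool returns the request URL rather than calling a server.

```python
from typing import Callable
from urllib.parse import urlencode

# Hypothetical FHIR R4 base URL; a real deployment would use the EHR's endpoint.
FHIR_BASE = "https://ehr.example.org/fhir"

def latest_lab_url(patient_id: str, loinc_code: str) -> str:
    """Build a FHIR search for the patient's newest Observation with a given LOINC code."""
    params = {
        "patient": patient_id,
        "code": f"http://loinc.org|{loinc_code}",
        "_sort": "-date",  # newest first
        "_count": 1,       # only the latest result
    }
    return f"{FHIR_BASE}/Observation?{urlencode(params)}"

# Tool registry: the reasoner chooses a tool by name and supplies keyword arguments.
TOOLS: dict[str, Callable[..., str]] = {"latest_lab_url": latest_lab_url}

def call_tool(name: str, **kwargs) -> str:
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)

url = call_tool("latest_lab_url", patient_id="123", loinc_code="2345-7")  # serum/plasma glucose
print(url)
```

Routing every call through one small registry means the reasoner only has to pick a name and arguments, and each tool call passes through a single place where it can be logged for audit.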

Memory

Like human assistants, agents need memory to operate effectively. Scratchpad memory enables them to cache intermediate results—such as a patient’s vital signs or lab results halfway through a conversation—while long-term memory supports the tracking of historical background information, such as a patient’s chronic conditions, medication regimen, or previous communications.
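A minimal sketch of the two memory tiers, assuming a simple in-process store: the scratchpad is a plain dictionary cleared at the end of each conversation, while long-term facts are kept per patient. The class, its method names, and the sample data are hypothetical; a production system would persist long-term memory in a database with access controls.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class AgentMemory:
    # Scratchpad: intermediate results for the current conversation only.
    scratchpad: dict[str, object] = field(default_factory=dict)
    # Long-term memory: durable facts per patient, kept across conversations.
    long_term: dict[str, list[str]] = field(default_factory=lambda: defaultdict(list))

    def note(self, key: str, value: object) -> None:
        self.scratchpad[key] = value

    def remember(self, patient_id: str, fact: str) -> None:
        if fact not in self.long_term[patient_id]:
            self.long_term[patient_id].append(fact)

    def recall(self, patient_id: str, keyword: str) -> list[str]:
        # Naive keyword match; real systems might use a database or vector search.
        return [f for f in self.long_term[patient_id] if keyword.lower() in f.lower()]

    def end_conversation(self) -> None:
        self.scratchpad.clear()  # discard the scratchpad; long-term memory persists

mem = AgentMemory()
mem.remember("pt-123", "Type 2 diabetes; past non-adherence to metformin")
mem.note("latest_glucose_mg_dl", 182)  # hypothetical mid-conversation value
print(mem.recall("pt-123", "metformin"))
mem.end_conversation()
print(mem.scratchpad)  # {}
```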

Reasoner

At the heart of each agent is its reasoner: the component that lets it decompose complex tasks into manageable sub-steps, such as evaluating symptoms, deciding on urgency, reviewing contraindications, and recommending next steps. In medicine, this translates into reasoning through nuanced clinical pathways or prioritizing administrative backlogs.
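The reasoner’s decomposition can be pictured as a dependency graph of sub-steps. A minimal sketch, assuming the plan has already been produced (it is hardcoded here as a hypothetical example; in a real agent an LLM would propose it): Python’s standard `graphlib` then yields an order in which each step runs only after its prerequisites.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical plan a reasoner might produce for a symptom review.
# Each sub-step maps to the set of sub-steps that must finish first.
plan = {
    "evaluate_symptoms": set(),
    "decide_urgency": {"evaluate_symptoms"},
    "review_contraindications": {"evaluate_symptoms"},
    "recommend_next_steps": {"decide_urgency", "review_contraindications"},
}

# static_order() yields every sub-step after all of its prerequisites.
order = list(TopologicalSorter(plan).static_order())
print(order)
```

Sub-steps with no dependency on each other, such as urgency and contraindications here, have no fixed relative order, which also marks them as candidates to run in parallel.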

Actions

Agents then take actions based on their environment and reasoning. This might involve sending a message to a patient, updating the EHR, or referring a case to a specialist. Many agentic systems use ReAct (Reasoning + Acting) loops, in which the agent reasons, takes an action, observes the result, and adjusts accordingly, much like a medical resident learns through experience.
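The loop itself fits in a few lines. This is a minimal, framework-free illustration, assuming a `policy` function that stands in for the LLM: here it is a hypothetical scripted policy, and `get_glucose` is a hypothetical stub tool. The `max_steps` cap is a design choice that keeps a confused agent from looping forever.

```python
from typing import Callable

def react_loop(policy: Callable[[list[str]], tuple[str, str, str]],
               tools: dict[str, Callable[[str], str]],
               max_steps: int = 5) -> list[str]:
    """Minimal ReAct-style loop: reason -> act -> observe, until the policy says 'finish'."""
    trace: list[str] = []
    for _ in range(max_steps):
        thought, action, arg = policy(trace)          # Reason: choose the next action
        trace.append(f"Thought: {thought}")
        if action == "finish":
            trace.append(f"Answer: {arg}")
            return trace
        observation = tools[action](arg)              # Act: call the chosen tool
        trace.append(f"Observation: {observation}")   # Observe: feed the result back
    trace.append("Stopped: step limit reached")       # guard against endless loops
    return trace

# Hypothetical scripted stand-in for an LLM: look up a lab value, then answer with it.
def scripted_policy(trace: list[str]) -> tuple[str, str, str]:
    if not any(line.startswith("Observation") for line in trace):
        return ("I need the latest glucose value.", "get_glucose", "pt-123")
    return ("The value is available; report it.", "finish", trace[-1].split(": ", 1)[1])

tools = {"get_glucose": lambda patient_id: "182 mg/dL"}  # hypothetical stub tool
for line in react_loop(scripted_policy, tools):
    print(line)
```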

How do AI agents in healthcare work?

To see how agentic AI operates in healthcare, let’s walk through an example: a virtual care assistant handling chronic disease follow-ups at a large hospital in a major US city.

Here’s what a day in the life of a virtual care assistant looks like:

A patient with type 2 diabetes is discharged after a short stay. Before discharge, the AI agent accesses the hospital’s EHR system (through tools) to retrieve the patient’s glucose history, medications, and appointment records. It saves this key information in its scratchpad (short-term memory) and recalls relevant past interactions from long-term memory, such as the patient’s past non-adherence to metformin.

Once the patient is back home, the agent starts a structured follow-up workflow. It works through the task step by step: first, verify that medications were picked up; second, check for early warning signs; third, book a follow-up appointment with the endocrinologist. Using a ReAct-like approach, it sends a check-in SMS (action), waits for the patient’s response, and then adjusts its next steps based on any symptoms or issues reported.
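The follow-up workflow can be sketched as an ordered script in which each reply can change what happens next. The questions, branch rules, and the injected `send_sms`/`get_reply` callables are hypothetical placeholders for a real messaging integration, not clinical guidance.

```python
from typing import Callable

# Hypothetical follow-up script: (step name, check-in question) pairs, sent in order.
FOLLOW_UP_STEPS = [
    ("medication_pickup", "Have you picked up your prescriptions? (yes/no)"),
    ("warning_signs", "Any new or worsening symptoms, such as dizziness? (yes/no)"),
    ("schedule_visit", "Shall we book your endocrinology follow-up? (yes/no)"),
]

def run_follow_up(send_sms: Callable[[str], None], get_reply: Callable[[], str]) -> list[str]:
    """Send each check-in, record the reply, and branch on it."""
    log: list[str] = []
    for step, question in FOLLOW_UP_STEPS:
        send_sms(question)                   # action: send the check-in SMS
        reply = get_reply().strip().lower()  # observation: the patient's answer
        log.append(f"{step}: {reply}")
        if step == "medication_pickup" and reply != "yes":
            log.append("flag: medications not picked up, alert care team")
        if step == "warning_signs" and reply != "no":
            log.append("escalate: symptoms reported, hand off to nurse")
            break  # stop the routine script; a clinician takes over
    return log

# Usage with canned replies standing in for a real SMS gateway.
replies = iter(["yes", "yes"])
outbox: list[str] = []
print(run_follow_up(outbox.append, lambda: next(replies)))
```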

When the patient reports dizziness, the agent compares the symptom against past records, cross-checks it against known medication side effects, and alerts a nurse, triaging the case before it escalates. It then suggests moving the follow-up appointment earlier and notifies the care team with an automatically generated note in the EHR.
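One way to express this symptom check is a rule layer that cross-references reported symptoms with medication side effects and the patient’s history before deciding whether to escalate. A minimal sketch: the side-effect table, red-flag list, and history entry below are hypothetical illustrative data, not clinical guidance; in practice these rules would come from clinicians and drug references.

```python
from dataclasses import dataclass, field

# Hypothetical, illustrative data only; not clinical guidance.
KNOWN_SIDE_EFFECTS = {"metformin": {"nausea", "diarrhea", "abdominal pain"}}
RED_FLAG_SYMPTOMS = {"dizziness", "chest pain", "confusion"}

@dataclass
class TriageResult:
    escalate: bool = False
    reasons: list[str] = field(default_factory=list)

def triage(symptoms: set[str], medications: list[str], history: list[str]) -> TriageResult:
    result = TriageResult()
    for med in medications:
        overlap = symptoms & KNOWN_SIDE_EFFECTS.get(med, set())
        if overlap:  # symptom matches a known side effect of a current medication
            result.reasons.append(f"possible {med} side effect: {', '.join(sorted(overlap))}")
            result.escalate = True
    flagged = symptoms & RED_FLAG_SYMPTOMS
    if flagged:  # symptom is on the red-flag list
        result.reasons.append(f"red-flag symptom: {', '.join(sorted(flagged))}")
        result.escalate = True
    new = [s for s in sorted(symptoms) if not any(s in note for note in history)]
    if new:  # context for the nurse; not an escalation trigger by itself
        result.reasons.append(f"new since prior records: {', '.join(new)}")
    return result

history = ["2024-11: nausea after metformin dose change"]  # hypothetical record
result = triage({"dizziness"}, ["metformin"], history)
if result.escalate:
    # A real system would alert the nurse, propose an earlier slot, and write the note to the EHR.
    print("Agent note: " + "; ".join(result.reasons))
```

Keeping the escalation decision in deterministic rules like these, rather than leaving it entirely to the LLM, makes it easy to audit and test.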

All of this happens with minimal human intervention.

Here, the AI isn’t merely performing one task. It’s working as a semi-autonomous teammate: detecting new information, reasoning about next actions, acting on its decisions, and improving over time through feedback. That’s agentic AI in healthcare in a nutshell, less about replacing clinicians and more about giving them the time and tools to provide better care.

Key use cases of AI agents in healthcare

Patient-facing services are the most common use case for agentic AI in healthcare. Agentic virtual health concierges can triage symptoms, remind patients to take medications, clarify discharge summaries, and check in on care plans, all while escalating concerns to clinicians when necessary. As the example above shows, agents provide continuity and personalization without overwhelming staff.

Another arena for agentic AI is the drug discovery pipeline. Drug development is notoriously slow and expensive (an average of 10 years and $2.6 billion per new drug), so even small AI-driven accelerations can save years. For example, in March 2025 the biotechnology analytics company Causaly launched Discover, an “end-to-end” agentic AI drug discovery platform. Discover autonomously synthesizes vast amounts of biomedical data, reasons over research questions, and formulates hypotheses, aiming to shorten bench-to-bedside time.

Similarly, Google DeepMind’s TxGemma, a collection of open models, lets developers fine-tune models for particular drug R&D tasks (target validation, ADME prediction, etc.) and even link them into agentic workflows. In February 2025, Google also announced an “AI Co-Scientist,” a multi-agent system built on Gemini 2.0 that can autonomously generate hypotheses, design experiments, and iteratively refine them. The Co-Scientist uses specialized agents (generation, reflection, ranking, etc.) to simulate the scientific process and can reportedly do in days what would take human scientists months or years.

Collectively, these innovations point to a future in which AI is less a “data grunt” and more a partner to analysts and researchers, jointly designing experiments and generating findings through agentic systems.

Examples like these are inspiring, but getting from “it works in a test room” to “it works in production” is where things break.

Moving from pilot to production introduces a new set of challenges: technical, organizational, and regulatory. Hospitals want more than a working demo; they need reliable uptime, fast response times, audit trails, and integration with existing clinical workflows. The gap between early experimentation and enterprise deployment is where many promising initiatives stall.

Production challenges of agentic AI in healthcare and their solutions

Bringing agentic AI solutions into production can present several challenges:

Scale

Managing a growing ecosystem of agents, each with its own role, memory, and tools, demands careful orchestration. Collaboration becomes more complicated as work crosses departments and systems.

Various frameworks offer ways to scale; for example, LlamaIndex uses event-driven workflows to orchestrate multiple agents at scale.
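LlamaIndex’s workflows have their own API, which is not reproduced here. The sketch below is a framework-free illustration of the same event-driven idea, assuming hypothetical agents and event names: each agent subscribes to event types, and any events it emits are queued for the next agents, so adding a department means adding a subscriber rather than rewiring a pipeline.

```python
import asyncio
from collections import deque
from typing import Awaitable, Callable

Event = tuple[str, dict]
Handler = Callable[[dict], Awaitable[list[Event]]]

class EventBus:
    """Routes each event to subscribed agents; events they emit are queued in turn."""

    def __init__(self) -> None:
        self.handlers: dict[str, list[Handler]] = {}
        self.log: list[str] = []

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self.handlers.setdefault(event_type, []).append(handler)

    async def run(self, event_type: str, payload: dict) -> None:
        pending: deque[Event] = deque([(event_type, payload)])
        while pending:
            etype, data = pending.popleft()
            self.log.append(etype)
            handlers = self.handlers.get(etype, [])
            if not handlers:
                continue
            # All agents subscribed to this event handle it concurrently.
            results = await asyncio.gather(*(h(data) for h in handlers))
            for emitted in results:
                pending.extend(emitted)

# Hypothetical agents for a discharge scenario.
async def discharge_agent(data: dict) -> list[Event]:
    return [("followup_needed", data)]

async def scheduling_agent(data: dict) -> list[Event]:
    return [("appointment_booked", data)]

async def outreach_agent(data: dict) -> list[Event]:
    return []  # would send a check-in SMS; emits no further events here

bus = EventBus()
bus.subscribe("patient_discharged", discharge_agent)
bus.subscribe("followup_needed", scheduling_agent)
bus.subscribe("followup_needed", outreach_agent)
asyncio.run(bus.run("patient_discharged", {"patient": "pt-123"}))
print(bus.log)  # ['patient_discharged', 'followup_needed', 'appointment_booked']
```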

Latency

Unlike single-shot models, agentic systems operate iteratively. A single task may require multiple reasoning steps and LLM calls, each adding delay. This latency is particularly problematic in real-time healthcare applications.
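A back-of-the-envelope latency estimate makes the cost of iteration concrete. All step names and millisecond figures below are hypothetical; the point is that sequential calls add up, while independent calls run concurrently cost only as much as the slowest one.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Step:
    name: str
    latency_ms: float
    group: Optional[str] = None  # steps sharing a group run concurrently

def estimate_latency(steps: list[Step]) -> float:
    """Sequential steps add up; each concurrent group costs only its slowest member."""
    sequential = sum(s.latency_ms for s in steps if s.group is None)
    slowest: dict[str, float] = {}
    for s in steps:
        if s.group is not None:
            slowest[s.group] = max(slowest.get(s.group, 0.0), s.latency_ms)
    return sequential + sum(slowest.values())

# Hypothetical plan for one follow-up task (all timings are assumptions).
plan = [
    Step("plan (LLM call)", 800),
    Step("EHR lab lookup", 300, group="fetch"),
    Step("insurance eligibility check", 450, group="fetch"),
    Step("reason over results (LLM call)", 900),
    Step("draft patient message (LLM call)", 700),
]
BUDGET_MS = 2000
total = estimate_latency(plan)
print(total, "ms", "over budget" if total > BUDGET_MS else "within budget")
```

With these assumed numbers the task takes 2,850 ms, or 3,150 ms if the two lookups ran one after the other, so it misses a hypothetical 2-second budget either way; here, cutting LLM calls would matter more than parallelizing lookups.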

Managed LLM APIs such as GPT-4o can be slow because of network round trips and provider-side guardrails. Self-hosted LLMs, running on GPUs you control, can help reduce latency.

Performance and hallucination issues

LLMs are probabilistic by nature, so an agent’s behavior may vary from run to run, producing non-deterministic outputs. For any clinical application, this variability is unacceptable.

Using techniques like output templating (e.g., JSON format) and prompt engineering methods (e.g., few-shot examples) can help reduce response variability. Counteracting hallucination—where agents make up facts or misinterpret context—usually involves further fine-tuning, retrieval-augmented generation (RAG), and ongoing evaluation loops.
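Output templating works best when the template is also enforced in code. A minimal sketch, assuming a hypothetical three-field triage schema: the agent’s reply is parsed as JSON and rejected if any field is missing, mistyped, or outside the allowed values, so downstream systems never act on a malformed answer.

```python
import json

# Hypothetical schema for a triage message the agent must produce.
REQUIRED_FIELDS = {"urgency": str, "summary": str, "escalate": bool}
ALLOWED_URGENCY = {"routine", "soon", "urgent"}

def parse_agent_output(raw: str) -> dict:
    """Parse an LLM reply and validate it against the fixed template; raise on any deviation."""
    data = json.loads(raw)  # json.JSONDecodeError (a ValueError subclass) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"missing or mistyped field: {key}")
    if data["urgency"] not in ALLOWED_URGENCY:
        raise ValueError(f"unexpected urgency: {data['urgency']}")
    return data

ok = parse_agent_output('{"urgency": "soon", "summary": "Reports dizziness", "escalate": true}')
try:
    parse_agent_output('{"urgency": "maybe", "summary": "n/a", "escalate": false}')
except ValueError as err:
    print("rejected:", err)  # in practice: retry the prompt or route to a human
```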

Why choosing the right AI development partner matters

Building agentic AI systems isn’t just about choosing the right model — it’s about making hard engineering tradeoffs at every layer: prompt design, orchestration logic, infrastructure, feedback loops, UI, compliance. Most teams hit a wall not because they lack ideas, but because they lack AI-native engineers — developers who think in tokens and latency budgets, who understand how agents behave under load, and who know when to fine-tune vs when to simplify.

Off-the-shelf frameworks help, but real-world deployments often demand custom glue code, failover handling, and deep integrations with existing systems like EHRs, LIMS, and payer portals. You need a team that understands both agent dynamics and the healthcare context, including its regulatory environment (HIPAA, FDA pathways) and data interoperability standards such as FHIR.

Outsourcing this work to the right AI development partner doesn’t mean handing it off blindly. It means collaborating with a partner who brings architectural foresight, rapid experimentation cycles, and production-grade maturity: one that has made the journey from lab demos to in-clinic pilots and knows where things break.

What’s next for agentic AI in healthcare

Agentic AI is still early, but it’s growing fast. Today’s implementations are automating documentation, streamlining triage, and accelerating R&D. Tomorrow’s agents could manage care plans, coordinate multi-specialty treatments, and even function as clinical copilots.

We are heading toward a future where agentic AI systems will not only assist but also anticipate. Advances in memory, tool use, and reasoning are paving the way for persistent agents that monitor patient history across encounters, learn from feedback, and adapt their behavior.

A glimpse into what’s coming

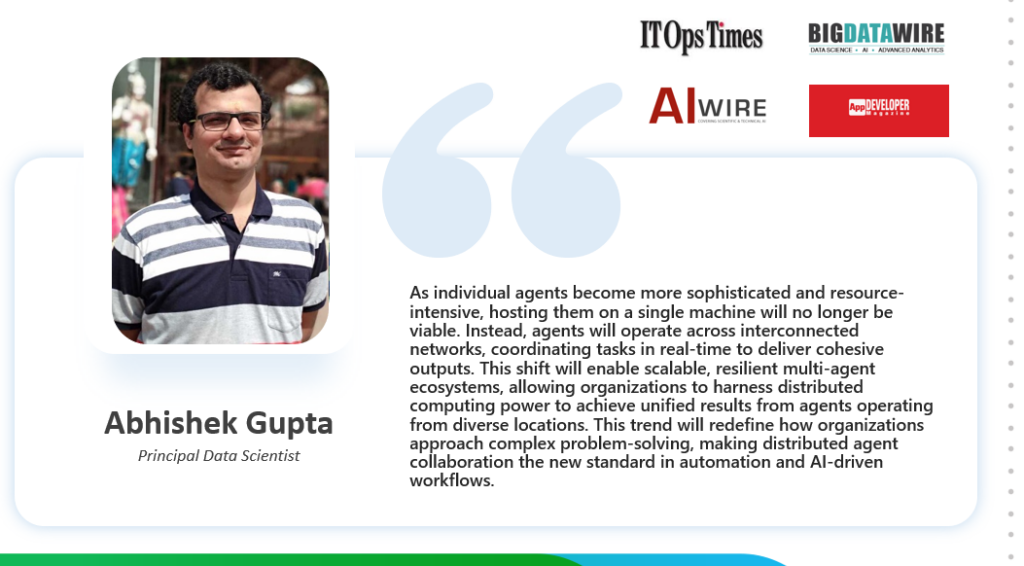

As multi-agent systems evolve, our Principal Data Scientist, Abhishek Gupta, predicts that the growth of multi-agent apps will drive the adoption of distributed frameworks. Advancements in LLM reasoning and agent-driven ML solutions will enable scalable, autonomous workflows across industries.

For the healthcare sector, this shift has transformative potential. Picture care teams augmented by agents that work not only within a hospital but across a network of providers, payers, and labs, securely sharing insights, triaging patients, and coordinating treatment across systems in real time. Distributed multi-agent ecosystems could make this kind of intelligent, collaborative automation the norm rather than the exception.

As these frameworks become available, healthcare organizations that adopt agent-native design now will be much better placed to provide personalized, proactive, and scalable care in the future.

Conclusion

Agentic AI in healthcare is not a distant vision; it’s already reshaping diagnostics, patient engagement, drug discovery, and operations. But turning this potential into production-ready solutions demands more than off-the-shelf tech. It calls for AI-native engineers, deep healthcare context, and a mindset tuned to experimentation, scale, and safety.

If you are interested in how agentic AI can enhance your healthcare workflows or if you wish to build secure, scalable applications with tangible impact, let’s discuss.